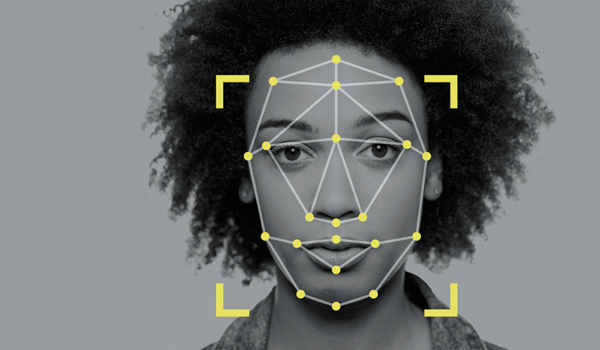

Suspending police use of facial recognition technology could 'allow crime to flourish'

As Amazon moves to ban police from using its facial recognition software and IBM ‘abandons’ the technology, there are concerns that limiting its use in law enforcement “will only allow crime to flourish”.

Amazon is to suspend police use of its facial recognition software for a year to allow governments to put in place “stronger regulations” governing use of the technology.

The Seattle-based company said its decision followed concerns about the “ethical use” of facial recognition technology.

Amazon’s announcement came a day after IBM’s decision to stop offering “general purpose facial recognition or analysis software” amid worries over mass surveillance and racial profiling.

Microsoft has also said it will limit the use of its facial recognition technology by police. It says it will not “start sales” to US police departments until national regulations “grounded in human rights” are in place to govern use of the technology.

However, Amazon said it would continue to allow organisations such as the anti-child sex trafficking agency Thorn and the International Centre for Missing and Exploited Children to use its ‘Rekognition’ technology to “help rescue human trafficking victims and reunite missing children with their families”.

In a statement, Amazon said it was “implementing a one-year moratorium” on police use of its facial recognition technology.

It added: “We’ve advocated that governments should put in place stronger regulations to govern the ethical use of facial recognition technology, and in recent days, the US House of Congress appears ready to take on this challenge. We hope this one-year moratorium might give Congress enough time to implement appropriate rules, and we stand ready to help if requested.”

A number of US cities have already banned the use of facial recognition technology by police and other government agencies following privacy concerns.

Jason Sierra, sales director at Seven Technologies Group, agreed that although facial recognition is a vital tool for the police, it should be regulated and used within strict legal and ethical guidelines.

“It is completely understandable that the public is concerned about a powerful new technology that has been reported to be misused by bad actors,” he said. “But facial recognition can be a vital tool for police. It has helped law enforcement agencies across the globe, including in the US, UK and China, to bring murderers and violent criminals to justice, and to find people reported missing, including victims of human trafficking. The potential of facial recognition in tackling serious and violent crime cannot be ignored.

“The technology’s purpose is to help police forces prevent violent crime, arrest dangerous criminals quickly and to secure justice for victims of crime and their families. It is not intended to be used as a tool to discriminate or to perpetrate a biased agenda from people in positions of power against innocent parties. Ethical use of new technologies is imperative.

“We have an urgent need, here in the UK and across the globe, to govern and regulate the use of technologies that use artificial intelligence (AI) so that those who seek to use it for harmful purposes can be prevented and, if necessary, prosecuted.”

However, Mr Sierra warned: “Limiting use of facial recognition in law enforcement, where it is greatly needed to help put an end to human trafficking, kidnappings and serious and organised crime, will only allow crime to flourish and the effects will be felt by the public. National safety and security should not be compromised, but bad actors can be culled with strict legal and ethical regulations.

“Abandoning facial recognition is not the answer, but it is clear that we need ethical guidelines to determine appropriate use of the technology.”

In a letter to the US Congress, IBM chief executive officer Arvind Krishna said the company would no longer condone the use of technology for “mass surveillance and racial profiling”.

While technology can increase transparency and help police protect communities, he said it “must not promote discrimination or racial injustice”.

“IBM no longer offers general purpose IBM facial recognition or analysis software,” said Mr Krishna. “IBM firmly opposes and will not condone uses of any technology, including facial recognition technology offered by other vendors, for mass surveillance, racial profiling, violations of basic human rights and freedoms, or any purpose which is not consistent with our values and ‘Principles of Trust and Transparency’.

“AI is a powerful tool that can help law enforcement keep citizens safe. But vendors and users of AI systems have a shared responsibility to ensure that AI is tested for bias, particularly when used in law enforcement, and that such bias testing is audited and reported.”

Mr Krishna added that national policy should encourage and advance uses of technology that bring “greater transparency and accountability” to policing, such as body cameras and modern data analytics techniques.

“We believe now is the time to begin a national dialogue on whether and how facial recognition technology should be employed by domestic law enforcement agencies,” he said.