Facebook removed 8.7 million pieces of child nudity

Using new artificial intelligence (AI) technology, Facebook has identified and removed almost nine million pieces of child exploitative content in the past three months.

Of the 8.7 million items removed, 99 per cent were taken down before anyone had reported them.

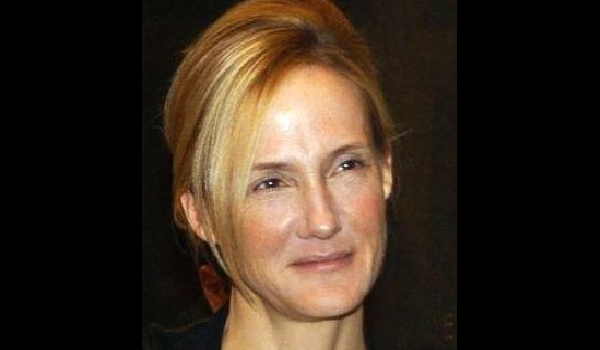

Antigone Davis, global head of safety at Facebook, said the technology had been developed over the past year specifically to tackle child exploitation.

The social network also reports incidents to the National Centre for Missing and Exploited Children (NCMEC); the two organisations have been partners since 2015.

Facebook said that it was helping the NCMEC develop software that prioritises the reports it shares with law enforcement to address the most serious cases first.

It added that it was motivated to develop a “systematic way” to protect children after an 11-year-old girl was rescued when a motel owner recognised her from an alert that a friend had shared on Facebook.

All images confirmed to be sexually explicit are added to an image bank to improve the technology’s ability to identify other images with related content.

More than 100 law enforcement agencies are in contact with Facebook.

Ms Davis said: “Recently our researchers have been focused on classifiers to prevent unknown images, new images, using a nudity filter as well as signals to identify that it’s a minor. We are actually able to get in front of those images and remove them.”

Neil Basu, assistant commissioner of the Metropolitan Police Service, recently warned that websites should not rely on AI technology alone, and that if incidents were not reported to the police there would be a real “gap in intelligence”.